Patient's AI Bill of Rights - The Foundation

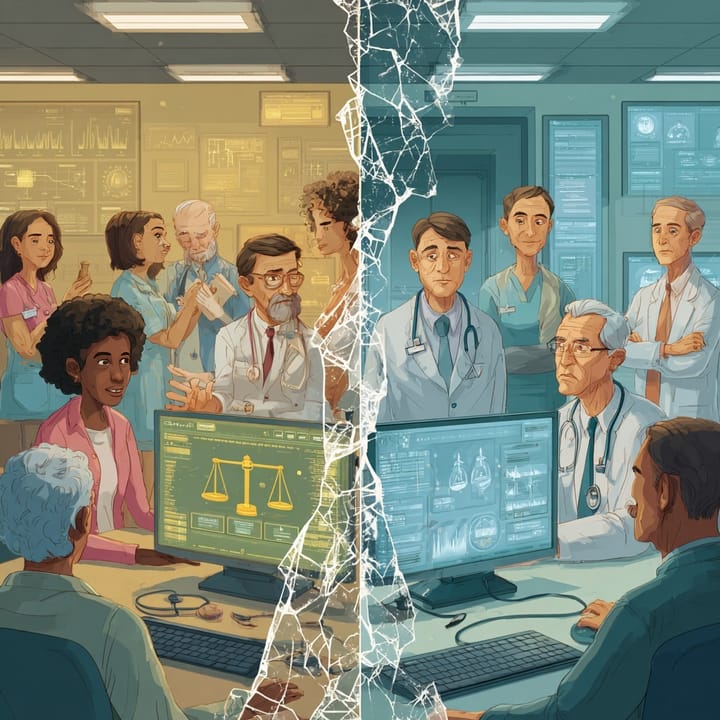

The concepts behind a patient's bill of rights: respect for persons, beneficence, and justice, as outlined in the Belmont Report.

Executive Summary

With the rapid development and adoption of AI technologies, I'm finding that patients are seldom part of the discussion. Since being diagnosed with a chronic medical condition, I have become a foster child of medicine and hospital administration. I am a certified Patient Leader, volunteering weekly at my local hospital. I am a member of HIMSS and hold certifications from Stanford University School of Medicine and Johns Hopkins for my expertise in healthcare AI. In other words, I see healthcare AI from a unique perspective. So while there are reports about a Healthcare Bill of Rights from some sources, for me, it is personal.

Frankly, the application of AI technologies could have saved me from a medical error that nearly cost me my life. So when I talk about a Patient's AI Bill of Rights, I see the necessity and opportunity to create a discussion with patients that can strengthen the doctor-patient relationship in new ways that can improve care and communication.

This report examines the enduring relevance of the Belmont Report's ethical principles (Respect for Persons, Beneficence, and Justice) as foundational guides for the responsible development and deployment of Artificial Intelligence (AI) in healthcare.

Beyond the Algorithm: Forging a Patient-Centric Future with an AI Bill of Rights

Artificial Intelligence is not on the horizon; it’s in our clinics, our diagnostic labs, and our administrative offices. AI is rapidly transforming the very landscape of medicine, offering a future of incredible precision, efficiency, and personalized care. From analyzing vast datasets to detect illness earlier to automating routine tasks, its potential to enhance global health outcomes is immense.

However, with this great promise comes a profound responsibility. The integration of AI introduces complex ethical challenges, including patient privacy, algorithmic bias, and the "black box" problem, where AI's decision-making process is dangerously opaque. This lack of transparency is a direct threat to patient trust—the very bedrock of healthcare.

To navigate this new frontier, we don't need to invent a new moral compass. We need to adapt a proven one. The 1979 Belmont Report, with its core principles of Respect for Persons, Beneficence, and Justice, provides the essential ethical framework to guide us. My analysis translates these foundational duties into a clear, actionable AI Patients' Bill of Rights designed to empower patients and guide responsible innovation.

The First Principle: Respect for Persons

Core Tenet: Treat individuals as autonomous agents and protect those with diminished autonomy.

In the age of AI, this principle is tested in new and critical ways. Respect is not passive; it is an active acknowledgment of a patient's right to make informed decisions about their own body and data. The primary challenge is the "black box" nature of many AI systems, which fundamentally conflicts with true informed consent. If a patient cannot understand how an AI uses their data to arrive at a recommendation, their consent is compromised.

This leads to a core vulnerability: AI paternalism, where an algorithm prioritizes goals that may not align with a patient's personal values, stripping them of control and agency. Furthermore, we must recognize that AI creates new categories of vulnerable populations: not just children or the elderly, but also those on the wrong side of the digital divide, who may lack the technological literacy to navigate this new landscape.

The Second Principle: Beneficence & Non-Maleficence

Core Tenets: Maximize benefits and, above all, do no harm.

The potential benefits of AI are staggering. It can enhance diagnoses, personalize treatments, improve healthcare access in underserved areas, and increase operational efficiency, freeing clinicians to focus on complex patient needs. Emerging applications can even accelerate mRNA treatment development and transform immune cells into cancer killers.

But these benefits cannot come at the cost of patient safety. The risks are significant: algorithmic errors, data breaches, and unintended consequences that can exacerbate health disparities. To honor the principle of "do no harm," we must move beyond reactive fixes. A robust, risk-based framework is essential, where high-risk AI tools (like those in autonomous surgery) undergo the most stringent testing, monitoring, and oversight.

Crucially, AI must always augment, not replace, human clinical judgment. The "human-in-the-loop" is not just for accountability; it is a critical fail-safe to catch the errors and biases that automated systems will inevitably miss, ensuring we uphold our most sacred oath.

The Third Principle: Justice

Core Tenet: Ensure the fair distribution of benefits and risks.

Perhaps the greatest danger of improperly governed AI is its potential to amplify and entrench existing societal biases.

Algorithmic bias is not a technical glitch; it is a reflection of systemic inequity encoded into logic. When AI is trained on non-representative data, it produces skewed and unfair results.

We've already seen this happen. Skin cancer algorithms perform less accurately on darker skin tones because of biased training data. An algorithm designed to predict healthcare needs assigned lower risk scores to Black patients because it used cost of care as a proxy for sickness, failing to account for the fact that less money is historically spent on their care due to systemic barriers. This is not just bad data; it is injustice, scaled by technology.
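To make the cost-as-proxy mechanism concrete, here is a minimal sketch using entirely synthetic numbers. It is not the algorithm referenced above. The `Patient` fields, the two group labels, and the 30% spending gap are all assumptions chosen only for illustration. Both groups have identical health needs, yet ranking by past cost puts fewer group B patients into a hypothetical high-risk program than ranking by need does.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str               # hypothetical group label, "A" or "B"
    chronic_conditions: int  # stand-in for true health need
    annual_cost: float       # stand-in for past spending on care


def make_patients():
    """Build a synthetic cohort where both groups have identical need."""
    patients = []
    for conditions in range(1, 6):
        # Assumption: group B receives 30% less spending at the same need.
        patients.append(Patient("A", conditions, 1000.0 * conditions))
        patients.append(Patient("B", conditions, 700.0 * conditions))
    return patients


def top_k(patients, key, k):
    """Select the k patients ranked highest by the given scoring key."""
    return sorted(patients, key=key, reverse=True)[:k]


def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)


patients = make_patients()
by_cost = top_k(patients, lambda p: p.annual_cost, 4)
by_need = top_k(patients, lambda p: p.chronic_conditions, 4)

print(share_of_group(by_cost, "B"))  # 0.25
print(share_of_group(by_need, "B"))  # 0.5
```

In this toy cohort, group B makes up half of the sickest patients but only a quarter of those selected by cost. The model is never told anyone's group. The proxy alone carries the inequity.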

Mitigating this requires a multi-faceted approach: intentionally inclusive data collection, regular bias audits, multi-stakeholder review boards, and a commitment to Explainable AI (XAI). While AI can be a tool to reduce disparities by identifying and targeting interventions, this outcome is not automatic. Justice must be by design.
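As one illustration of what a regular bias audit might check, the sketch below compares sensitivity (the share of real cases the tool catches) across patient groups and flags a large gap. The record format, the group names, the counts, and the `max_gap` threshold of 0.1 are all hypothetical. A real audit would use clinically validated metrics and thresholds set by the review board.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, actually_positive, predicted_positive)."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            actual_pos[group] += 1
            if predicted:
                true_pos[group] += 1
    # Sensitivity = correctly detected cases / all real cases, per group.
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def audit(records, max_gap=0.1):
    """Flag the tool if sensitivity differs across groups by more than max_gap."""
    rates = sensitivity_by_group(records)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= max_gap}


# Synthetic example: 10 real cases per group, detected at different rates.
records = (
    [("lighter_skin", True, True)] * 9
    + [("lighter_skin", True, False)] * 1
    + [("darker_skin", True, True)] * 6
    + [("darker_skin", True, False)] * 4
    + [("lighter_skin", False, False)] * 5
    + [("darker_skin", False, False)] * 5
)

result = audit(records)
print(result["rates"])
print(result["passed"])  # False: the gap between groups exceeds the threshold
```

A failed audit like this one would not by itself say why the tool underperforms. It signals that the tool should go back to the review board before it is used on the affected group.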

A Proposed AI Patients' Bill of Rights

Synthesizing these principles, I propose the following rights as the cornerstone of patient-centric healthcare AI. This is not a checklist but an integrated framework where each right reinforces the others, creating a comprehensive safety net for patients.

- The Right to Informed Consent for AI Use: You must be told when AI is being used in your care and must consent to it.

- The Right to Understand AI's Role: You have the right to a clear, plain-language explanation of what the AI does, its limitations, and how it uses your data.

- The Right to Data Privacy and Security: Your health data must be protected with robust security, and you have the right to know how it is collected and used.

- The Right to Refuse AI-Supported Care: You retain the right to opt out of AI-driven diagnosis or treatment without penalty.

- The Right to Human Oversight and Intervention: An accountable human professional must always be in the loop to review and override AI-generated recommendations.

- The Right to Safety from AI Harms: AI tools must be rigorously tested, monitored, and proven safe and effective.

- The Right to Equitable AI Treatment: You have the right to care from AI systems that are free from bias and have been designed to ensure equitable outcomes for all populations.

- The Right to Accountability: In the event of an error, there must be a clear and transparent process to determine responsibility.

- The Right to Control Your Data: You must have meaningful control over how your personal health information is used for AI development and deployment.

- The Right to Access for All: AI technologies must be designed and deployed in a way that is accessible to all, including vulnerable populations and those with limited digital literacy.

The Path Forward

Implementing this vision requires a shared commitment.

- Policymakers must develop agile, adaptive regulatory frameworks that keep pace with technology, moving beyond a one-size-fits-all approach.

- Developers and Institutions must collaborate on everything from building diverse datasets to engaging patients in the co-design of AI systems.

- Healthcare Providers must embrace a new role as skilled evaluators and interpreters of AI tools, supported by mandatory continuing education on the ethical implications.

Ethical AI is not a destination; it is an ongoing process of vigilance, adaptation, and unwavering commitment. By championing this AI Patients' Bill of Rights, we can ensure that technology serves humanity, building a future where innovation enhances care while preserving our most fundamental human values.

About Dan Noyes

Dan Noyes operates at the intersection of healthcare AI strategy and governance. After 25 years leading digital marketing strategy, he is transitioning his expertise to healthcare AI, driven by his experience as a chronic care patient and his commitment to ensuring AI serves all patients equitably. Dan holds AI certifications from Stanford, Wharton, and Google Cloud, grounding his strategic insights in comprehensive knowledge of AI governance frameworks, bias detection methodologies, and responsible AI principles. His work focuses on helping healthcare organizations implement AI systems that meet both regulatory requirements and ethical obligations, building governance structures that enable innovation while protecting patient safety and advancing health equity.
