MedGemma: Comprehensive Analysis

MedGemma is Google DeepMind's collection of open AI models optimized for medical text and image comprehension. This executive summary covers the basics of what is becoming a core part of medical AI.

This report gives you what you need to understand the technology and how it can accelerate healthcare AI and medical innovation. More to come about Google's Co-Scientist, but this is a good start.

Bottom Line Up Front

MedGemma launched in May 2025 and represents a significant milestone in integrating AI into healthcare. After fine-tuning, MedGemma 4B achieves state-of-the-art performance on chest X-ray report generation, with a RadGraph F1 score of 30.3. A US board-certified radiologist judged 81% of its generated chest X-ray reports to be accurate enough to result in similar patient management. This is exactly the kind of technology that will define the next decade of healthcare AI.

Technical Overview: The Science Behind the Innovation

Architecture Foundation

MedGemma is built on the Gemma 3 decoder-only transformer architecture and fine-tuned on diverse medical datasets to improve performance on healthcare AI tasks. Training was conducted using JAX, which lets researchers take advantage of the latest-generation hardware, including TPUs, for faster and more efficient training of large models.

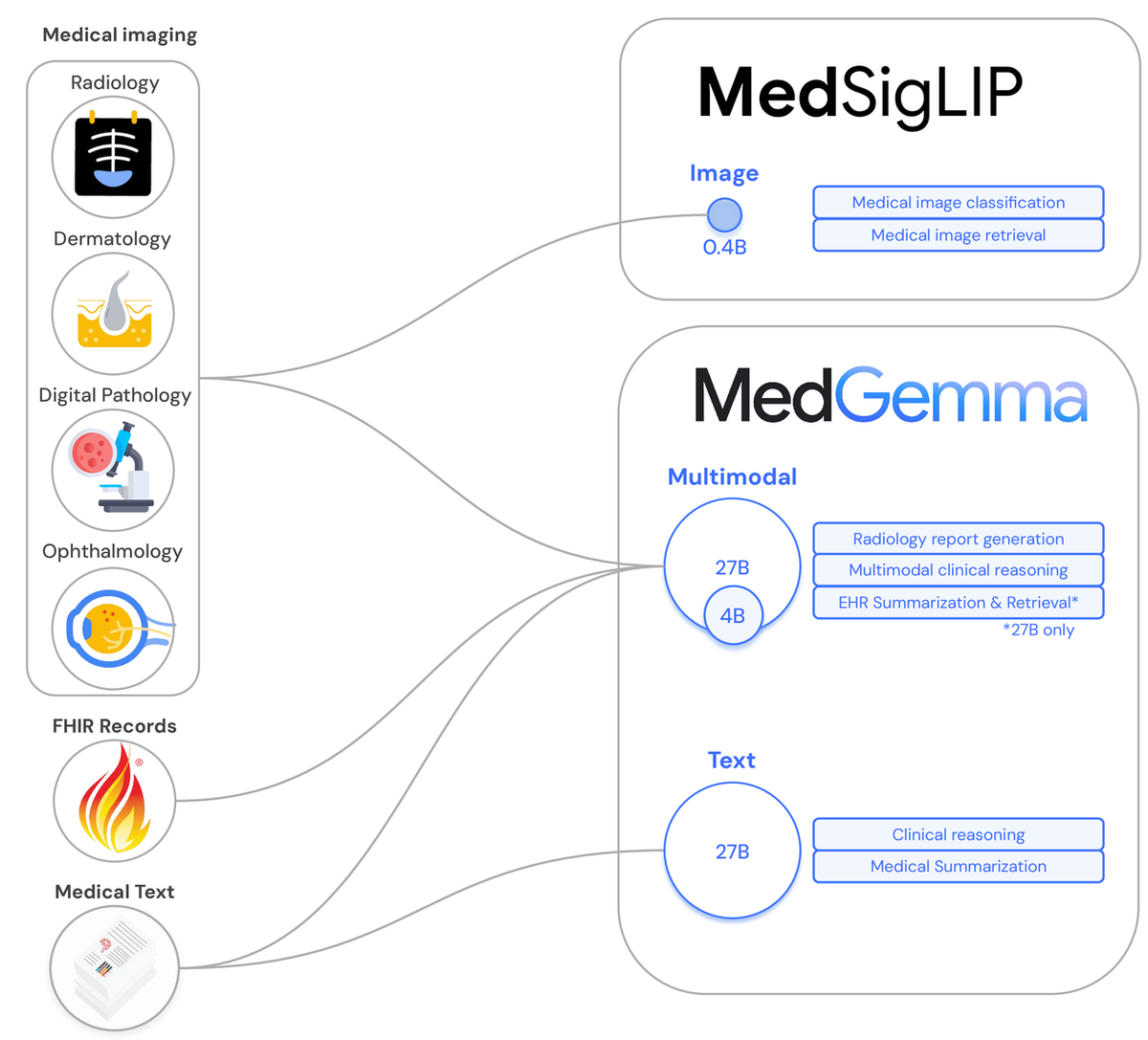

Two Powerful Variants

MedGemma 4B Multimodal:

- 4 billion parameters, with a SigLIP image encoder pre-trained on de-identified medical data, including chest X-rays, dermatology images, ophthalmology images, and histopathology slides

- Scores 64.4% on MedQA, ranking among the best very small (<8B) open models

- Available in both pre-trained (-pt) and instruction-tuned (-it) versions

MedGemma 27B Text-Only:

- 27 billion parameters trained exclusively on medical text and optimized for inference-time computation

- Scores 87.7% on MedQA medical knowledge and reasoning benchmark, among the best performing small open models (<50B)

- Available only as an instruction-tuned model

Training and Evaluation Data

The models were evaluated on more than 22 datasets spanning multiple medical tasks and imaging modalities. Public datasets include MIMIC-CXR, Slake-VQA, and PAD-UFES-20, among others. Proprietary datasets were used under license or with participant consent.

Key Features and Capabilities

Medical Image Analysis

MedGemma can be adapted to classify medical images (radiology, digital pathology, fundus, and skin images), generate medical image reports, and answer natural-language questions about medical images.

Clinical Text Processing

MedGemma can be adapted for use cases that require medical knowledge, including patient interviewing, triage, clinical decision support, and summarization.

Multimodal Integration

MedSigLIP is a lightweight 400M-parameter image encoder based on the SigLIP (Sigmoid Loss for Language Image Pre-training) architecture. It was adapted from SigLIP by tuning on diverse medical imaging data while retaining strong performance on natural images.
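To make the "sigmoid loss" in the SigLIP name concrete, here is a minimal sketch in plain Python. It treats every image-text pair in a batch as an independent binary classification, which is the general idea behind SigLIP-style training. The function name and the temperature `t` and bias `b` values are illustrative assumptions, not MedSigLIP's actual training configuration.

```python
import math

def sigmoid_pairwise_loss(image_embs, text_embs, t=10.0, b=-10.0):
    """Pairwise sigmoid loss over a batch of L2-normalized embeddings.

    Each (image i, text j) pair gets label +1 when i == j (a matching pair)
    and -1 otherwise. t and b are illustrative values (assumptions).
    """
    n = len(image_embs)
    total = 0.0
    for i in range(n):
        for j in range(n):
            dot = sum(x * y for x, y in zip(image_embs[i], text_embs[j]))
            label = 1.0 if i == j else -1.0
            z = label * (t * dot + b)
            # -log(sigmoid(z)) == softplus(-z), in a numerically stable form
            total += max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
    return total / n

# Toy batch: two orthogonal unit vectors standing in for embeddings.
images = [[1.0, 0.0], [0.0, 1.0]]
texts_aligned = [[1.0, 0.0], [0.0, 1.0]]
texts_swapped = [[0.0, 1.0], [1.0, 0.0]]

loss_aligned = sigmoid_pairwise_loss(images, texts_aligned)
loss_swapped = sigmoid_pairwise_loss(images, texts_swapped)
```

In this toy batch the correctly paired captions give a much lower loss than the swapped ones, which is the signal the encoder is trained on.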

Performance Metrics and Scientific Validation

Benchmark Performance

MedGemma 4B outperforms the base Gemma 3 4B model across all tested multimodal health benchmarks. For chest X-ray report generation on MIMIC-CXR, performance was measured with the RadGraph F1 metric.
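RadGraph F1 compares the clinical entities and relations extracted from a generated report with those extracted from a reference report. The real metric depends on a trained RadGraph extraction model. The sketch below shows only the final F1 step on items that have already been extracted, and the function name and example tuples are hypothetical.

```python
def set_f1(predicted, reference):
    """F1 overlap between two collections of extracted items."""
    predicted, reference = set(predicted), set(reference)
    if not predicted and not reference:
        return 1.0  # nothing to find and nothing predicted
    overlap = len(predicted & reference)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)

# Hypothetical (finding, status) pairs standing in for extractor output.
reference_items = {
    ("effusion", "present"),
    ("pneumothorax", "absent"),
    ("cardiomegaly", "present"),
}
generated_items = {
    ("effusion", "present"),
    ("pneumothorax", "absent"),
    ("edema", "present"),
}

score = set_f1(generated_items, reference_items)  # 2 of 3 items match on each side
```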

Clinical Validation

MedGemma provides strong baseline medical image and text comprehension for models of its size. Performance was evaluated on clinically relevant benchmarks, including both open and curated datasets, with a focus on expert human evaluation.

Safety and Reliability

Safety testing covered child safety, content safety, and representational harms. Safe levels of performance were observed in every category, with minimal policy violations for both model sizes.

Implementation Guide: Your Technical Roadmap

Getting Started

MedGemma models are accessible on platforms like Hugging Face and Google Cloud, subject to Health AI Developer Foundations terms of use. You can run them locally for experimentation or deploy via Google Cloud for production-grade applications.
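As a starting point for local experimentation, here is a minimal sketch using the Hugging Face `transformers` pipeline. The model ID `google/medgemma-4b-it`, the system prompt, and the helper names are assumptions for illustration. Check the model page for the current ID, accept the terms of use there first, and expect to need a GPU.

```python
def build_messages(question, image=None,
                   system_prompt="You are an expert radiologist."):
    """Build a chat-style message list for an image-text-to-text pipeline.

    `image` would typically be a PIL image; it is optional so the same
    helper works for text-only prompts.
    """
    user_content = [{"type": "text", "text": question}]
    if image is not None:
        user_content.append({"type": "image", "image": image})
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {"role": "user", "content": user_content},
    ]

def run_medgemma(messages, model_id="google/medgemma-4b-it", max_new_tokens=200):
    """Run one generation. Downloads the model on first use."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import pipeline

    pipe = pipeline("image-text-to-text", model=model_id)
    output = pipe(text=messages, max_new_tokens=max_new_tokens)
    # The last message in the returned conversation is the model's reply.
    return output[0]["generated_text"][-1]["content"]

messages = build_messages("Describe this chest X-ray.")
```

Calling `run_medgemma(messages)` performs the actual inference. Pass a PIL image to `build_messages` to ask about a specific image.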

Installation Requirements

Running the models requires GPU access at a minimum. The 27B model requires Colab Enterprise to run without quantization.
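A back-of-the-envelope calculation shows why quantization matters for the larger model. The sketch below estimates memory for the weights alone, using the round parameter counts of 4 billion and 27 billion. It ignores activations, the KV cache, and framework overhead, so real requirements are higher. The function name and the dtype table are illustrative.

```python
# Approximate storage cost per parameter for common precisions.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params, dtype):
    """Memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

mem_27b_bf16 = weight_memory_gb(27e9, "bfloat16")  # about 54 GB
mem_27b_int4 = weight_memory_gb(27e9, "int4")      # about 13.5 GB
mem_4b_bf16 = weight_memory_gb(4e9, "bfloat16")    # about 8 GB
```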

Deployment Options

MedGemma can be deployed as highly available, scalable HTTPS endpoints on Vertex AI through Model Garden. This option suits production-grade online applications that need low latency, high scalability, and high availability.
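Once an endpoint exists, calling it from Python could look roughly like the sketch below, which uses the `google-cloud-aiplatform` SDK. The request schema (a chat-completions style instance with an `@requestFormat` key) is an assumption about the serving container, and the project, location, and endpoint ID are placeholders. Confirm the expected payload against the documentation for your deployment.

```python
def build_chat_instance(messages, max_tokens=200, temperature=0.0):
    """Build one prediction instance (assumed chat-completions schema)."""
    return {
        "@requestFormat": "chatCompletions",  # assumption: depends on the container
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }

def predict_on_endpoint(project, location, endpoint_id, instance):
    """Send one instance to a deployed Vertex AI endpoint."""
    # Imported lazily so the payload helper works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    endpoint = aiplatform.Endpoint(endpoint_name=endpoint_id)
    response = endpoint.predict(instances=[instance])
    return response.predictions  # the shape of this depends on the container

instance = build_chat_instance(
    [{"role": "user", "content": "Summarize the key findings in this note: ..."}]
)
```

Passing `instance` to `predict_on_endpoint` with your own project, location, and endpoint ID sends the request.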

Customization Techniques

MedGemma can be fine-tuned with LoRA, a parameter-efficient fine-tuning technique. It can also be used within agentic systems alongside tools such as web search, FHIR generators and interpreters, Gemini Live for bidirectional audio conversation, or Gemini 2.5 Pro for function calling and reasoning.
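To see why LoRA counts as parameter-efficient, consider a single weight matrix of shape d_out x d_in. LoRA freezes it and trains two small matrices, B (d_out x r) and A (r x d_in), whose product is added to the frozen weight. The sketch below compares the trainable parameter counts. The layer size and rank are illustrative assumptions, not MedGemma's actual configuration.

```python
def full_params(d_in, d_out):
    """Trainable parameters when fine-tuning the whole weight matrix."""
    return d_in * d_out

def lora_params(d_in, d_out, rank):
    """Trainable parameters for LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_in + d_out)

# Illustrative layer size and rank (assumptions).
d_in, d_out, rank = 2560, 2560, 16

full = full_params(d_in, d_out)        # 6,553,600
lora = lora_params(d_in, d_out, rank)  # 81,920
fraction = lora / full                 # 0.0125, i.e. 1.25% of the full matrix
```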

Real-World Applications: Where the Magic Happens

Diagnostic Support

Healthcare providers can use MedGemma to build systems that assist with preliminary diagnosis. For example, a radiologist could use an application powered by MedGemma 4B to get an initial assessment of a chest X-ray that highlights potential areas of concern.

Medical Education

Medical institutions can develop interactive learning tools that help students understand the correlation between visual symptoms and clinical presentations.

Clinical Workflow Enhancement

Applications include medical image classification for radiology scans and dermatological images, medical image interpretation for generating reports or answering questions, and clinical text analysis for understanding and summarizing clinical notes.

Privacy-Preserving Healthcare

MedGemma can parse private health data locally before sending anonymized requests to centralized models, addressing privacy concerns and institutional policies.

Industry Impact and Future Prospects

Transformative Potential

MedGemma empowers developers to create applications that seamlessly integrate medical image and text analysis, enhancing capabilities of healthcare professionals and paving the way for future innovations.

Open Source Advantage

Because MedGemma is open, the models can be downloaded, built upon, and fine-tuned to meet developers' specific needs. Developers can run them on their own hardware for flexibility and privacy, and customize them for higher performance on a target task.

Democratization of Medical AI

MedGemma opens doors for developers, researchers, and healthcare innovators to build smarter, faster, and more customizable applications using open-source AI while maintaining control, efficiency, and privacy.

Current Limitations and Opportunities

Performance can vary with prompt structure. The models have not been evaluated for multi-turn conversations or multi-image inputs, which leaves room for future development.