Understanding ELSA


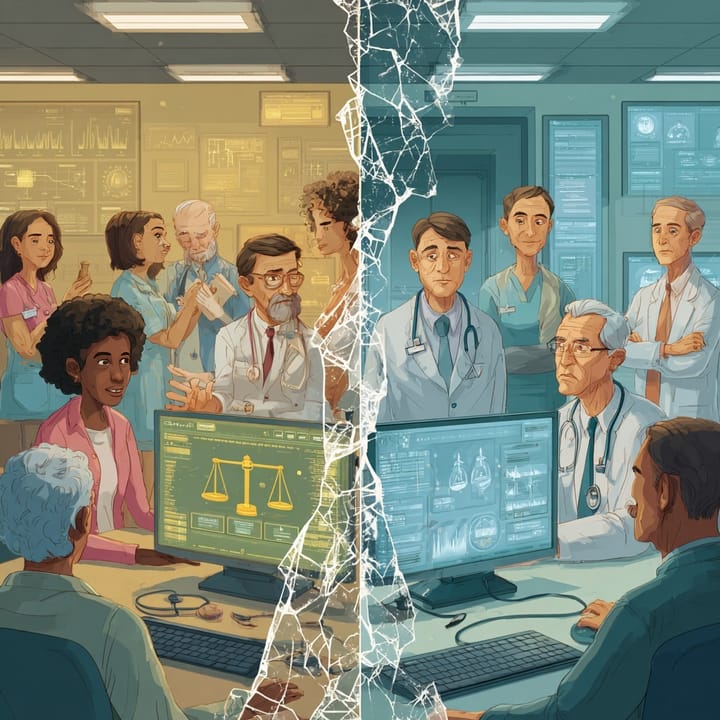

The U.S. Food and Drug Administration (FDA) officially launched its new generative artificial intelligence (AI) tool, ELSA (no official acronym has been released yet), on June 2, 2025. Designed to assist FDA staff with several core functions, ELSA represents a significant step in integrating AI into regulatory science. This series of briefs will summarize ELSA's purpose, examine its anticipated impact on patients, including myself, and offer a critical perspective on its advantages and disadvantages, incorporating scientific viewpoints from leading institutions such as Harvard Medical School, Massachusetts General Hospital, and Stanford University.

What is ELSA?

ELSA is an internal generative AI tool developed by the FDA. Its primary objective is to enhance the efficiency and speed of regulatory processes by assisting FDA staff with a range of tasks, including:

- Reviewing clinical protocols: Expediting the initial assessment of study designs.

- Summarizing adverse event reports: Quickly synthesizing large volumes of safety data.

- Comparing product labels: Identifying discrepancies or similarities across different product information.

- Identifying high-priority inspection targets: Using data to inform where regulatory oversight is most needed.

The FDA states that ELSA operates within a secure GovCloud environment and is designed not to train on industry-submitted data, measures intended to maintain data integrity and security.

About Dan Noyes

Dan Noyes operates at the intersection of healthcare AI strategy and governance. After 25 years leading digital marketing strategy, he is transitioning his expertise to healthcare AI, driven by his experience as a chronic care patient and his commitment to ensuring AI serves all patients equitably. Dan holds AI certifications from Stanford, Wharton, and Google Cloud, grounding his strategic insights in comprehensive knowledge of AI governance frameworks, bias detection methodologies, and responsible AI principles. His work focuses on helping healthcare organizations implement AI systems that meet both regulatory requirements and ethical obligations, building governance structures that enable innovation while protecting patient safety and advancing health equity.
