Beyond the Algorithm: What the Total Product Life Cycle Really Means for AI in Healthcare

Conversations about implementing AI in healthcare often begin and end with model accuracy. The reality is far more complex, and far more human. That’s why the FDA has championed a Total Product Life Cycle (TPLC) approach for AI: a comprehensive roadmap for translating AI products into clinical practice with safety, effectiveness, and trust at the core.

What the TPLC reminds us is this: an AI model is only one part of a much larger product. If it’s going to operate in a real-world care environment, we have to plan for everything that comes before and after the algorithm itself.

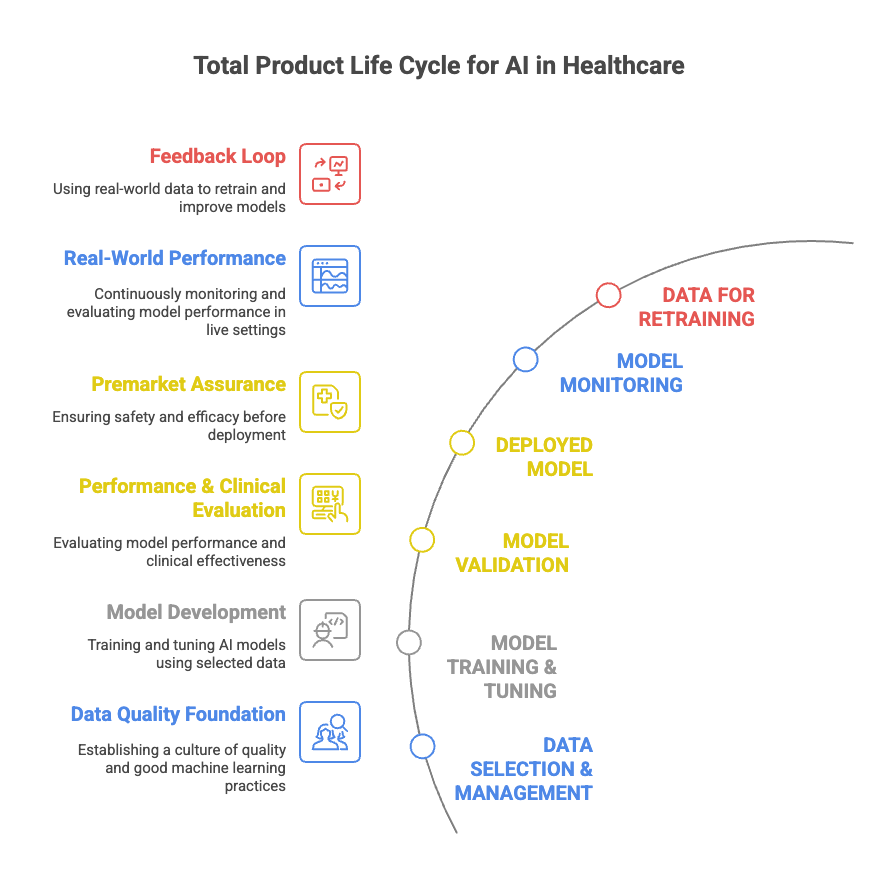

Let’s walk through the key phases of the TPLC for AI in healthcare:

1. Data Quality Foundation

Everything begins with data. We’re not just talking about having a lot of it — we’re talking about the right data, collected ethically and maintained with rigor. Poor data here often leads to poor outcomes later.
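One concrete way to ask whether you have "the right data" is to compare who appears in your training set with who you expect to serve. The sketch below is a hypothetical illustration, not part of the TPLC itself. It assumes you already have one subgroup label per training record (for example, an age band) and a reference share for each subgroup, such as one taken from the deploying site's patient census. The function name `representation_gaps` and the `min_ratio` tolerance are illustrative choices.

```python
from collections import Counter


def representation_gaps(train_groups, reference_shares, min_ratio=0.8):
    """Flag subgroups that are under-represented in the training data.

    train_groups: one subgroup label per training record.
    reference_shares: expected share of each subgroup in the target
        population (hypothetical input, e.g. from a site census).
    min_ratio: illustrative tolerance; a group is flagged when its
        training share falls below min_ratio * its reference share.
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    counts = Counter(train_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        # Groups missing from the training data count as a share of 0.
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < min_ratio * expected:
            gaps[group] = (round(observed, 3), expected)
    return gaps


# Example: older patients make up 30% of the population but 10% of training data.
train = ["18-40"] * 50 + ["41-65"] * 40 + ["65+"] * 10
reference = {"18-40": 0.3, "41-65": 0.4, "65+": 0.3}
print(representation_gaps(train, reference))
```

Here only the "65+" group is flagged. A check like this does not prove the data is "right," but it turns a vague worry about representativeness into a number someone has to look at.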

2. Model Development

This includes training and tuning the model using the selected data. At this stage, clinical utility, fairness, and relevance should already be top of mind. AI is never “just math” — it’s built from human choices.

3. Performance & Clinical Evaluation

Strong statistical performance is not enough; the model must also demonstrate effectiveness in the clinical setting. Does it generalize to new sites and patient populations? Can clinicians trust it? Is it interpretable?
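"Does it generalize?" is easier to answer when results are broken out by site or subgroup instead of reported as one overall number. As a minimal sketch, assuming a binary classifier and records of the form `(group, y_true, y_pred)`, the hypothetical helper below computes sensitivity and specificity per group. The record format and the choice of these two metrics are assumptions for illustration; a real clinical evaluation would pick metrics tied to the intended use.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """Sensitivity and specificity per subgroup.

    records: iterable of (group, y_true, y_pred) with binary labels,
        where 1 means the condition is present / predicted present.
    Returns {group: {"sensitivity": ..., "specificity": ...}}; a value
    is None when the group has no positive (or no negative) cases.
    """
    tallies = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        t = tallies[group]
        if y_true == 1:
            t["tp" if y_pred == 1 else "fn"] += 1
        else:
            t["fp" if y_pred == 1 else "tn"] += 1

    results = {}
    for group, t in tallies.items():
        positives = t["tp"] + t["fn"]
        negatives = t["tn"] + t["fp"]
        results[group] = {
            "sensitivity": t["tp"] / positives if positives else None,
            "specificity": t["tn"] / negatives if negatives else None,
        }
    return results


records = [
    ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 0, 0), ("site_A", 0, 1),
    ("site_B", 1, 0), ("site_B", 1, 1), ("site_B", 0, 0),
]
print(subgroup_metrics(records))
```

In this toy data, site_B misses half of its positive cases, a gap that a single pooled accuracy figure could easily hide.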

4. Premarket Assurance

Before deployment, the AI must be evaluated for safety and effectiveness, not just accuracy. This includes regulatory considerations and often requires multidisciplinary input from ethicists, clinicians, and technical experts.

5. Deployed Model

At this point, the AI product enters the clinical ecosystem. But its journey is far from over — it now becomes part of clinical workflows, human decision-making, and organizational change.

6. Model Monitoring

Live data enters the system, and the model must be continuously tracked for performance, bias, and unexpected behavior. This is not optional — it’s a clinical safety requirement.
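Continuous tracking often starts with a simple question: do the live inputs still look like the data the model was validated on? One common drift statistic is the Population Stability Index (PSI). The sketch below computes it for a single numeric input. The bin edges, the `eps` floor for empty bins, and the 0.25 alert level are assumptions; that last figure is a widely used rule of thumb, not a regulatory threshold. It also covers only input drift. Tracking performance and bias still requires outcome labels, as in the subgroup example above.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    n = len(values)
    return [c / n for c in counts]


def population_stability_index(baseline, live, edges, eps=1e-6):
    """PSI = sum over bins of (live - baseline) * ln(live / baseline).

    baseline: sample the model was validated on (assumed available).
    live: recent production inputs for the same feature.
    eps: floor on bin shares so empty bins don't cause log(0).
    """
    psi = 0.0
    for e, a in zip(bin_shares(baseline, edges), bin_shares(live, edges)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


baseline = [1, 2, 3, 4, 5, 6, 7, 8]
live = [6, 7, 8, 9, 6, 7, 8, 9]  # live inputs have shifted upward
edges = [2.5, 5.5]
psi = population_stability_index(baseline, live, edges)
if psi > 0.25:  # rule-of-thumb alert level, an assumption
    print(f"Drift alert: PSI = {psi:.2f}")
```

An alert like this is a prompt for human review, not an automatic verdict that the model is unsafe.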

7. Feedback Loop & Retraining

Findings from real-world use lead to retraining, updates, or even complete overhauls. This is where many AI systems fail: after deployment, they aren’t properly maintained, governed, or owned.

Six Questions to Ask Before You Deploy

TPLC encourages asking questions like these early, ideally before development even begins:

- What is the clinical utility?

- Who are the stakeholders?

- Is your training data representative and up-to-date?

- Is your model interoperable with the real-world systems it must connect to, such as the electronic health record (EHR)?

- Will it interrupt or enhance clinical workflows?

- Who is responsible for ongoing maintenance?

The TPLC isn’t just a regulatory checklist. It’s a mindset — one that centers the patient, honors the clinician, and grounds AI in a real-world context. And in a healthcare system already strained by fragmentation and burnout, we owe it to everyone involved to build AI that’s not only smart, but safe, sustainable, and human-aware.